Section 1: Understanding Project Requirements:

Project Description: As a Kubernetes developer, you have to complete the scalability features of the application. This means demonstrating how to create a Kubernetes cluster with autoscaling enabled, how to resize an existing cluster to meet the infrastructure requirements of an application that is already running in production, and finally how to autoscale the application itself at the level of ReplicaSets and Deployments.

Operations:

- Automatically resize clusters based on the demands of the workloads you want to run

- Manually resize a cluster to increase or decrease the number of nodes in that cluster

- Scale a deployed application in Google Kubernetes Engine

- Automatically add a new node to your cluster if you’ve created new Pods that don’t have enough capacity to run; conversely, if a node in your cluster is underutilized and its Pods can run on other nodes, automatically delete that node

- Solve underlying infra challenges by using only the resources that are needed at any given moment, and automatically getting additional resources when demand increases.

Autoscaling Concepts and Cluster Resource Management

Meeting these requirements will help you to:

- Develop an application that will scale up in terms of ReplicaSet, cluster size, number of nodes, etc. as and when required.

- Develop an application that will scale down in terms of ReplicaSet, cluster size, number of nodes, etc. as and when required.

- Use the available resources efficiently for large-scale applications, as measured by utilization and performance.

Section 2: Project Implementation:

Step 1: Create a Kubernetes Cluster

There are various ways to create a Kubernetes cluster. You can choose Google Cloud or Amazon Web Services (AWS), as you prefer.

Step 2: Cluster autoscaler

- Autoscaling a Kubernetes cluster has practical advantages. You pay only for the resources required at any given moment: the autoscaler adds nodes when demand increases and removes surplus nodes when the workload decreases.

- Remember that when Pods are moved or rescheduled while the cluster autoscales, your services may experience some disruption. For instance, if your microservice consists of a controller with a single replica, that replica’s Pod may be restarted on a different worker node if its current node is deleted.

- Before enabling autoscaling, make sure your microservices can tolerate this kind of disruption, and design them so that scaling down does not interrupt the services they provide.

The following steps explain how to use a cluster auto-scaler.

1. Create a cluster with autoscaling

2. Add a node pool with autoscaling
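On GKE, the two steps above could be sketched with the gcloud CLI as follows. This is a minimal sketch, assuming gcloud is installed and authenticated and that a default project and zone are already configured. The function names, cluster and pool names, and node counts are placeholders chosen for illustration, not values from this article.

```shell
# Sketch only: function names and default values are illustrative.

# Step 1: create a GKE cluster whose default node pool autoscales
# between a minimum and a maximum number of nodes.
create_autoscaling_cluster() {
  cluster="${1:?cluster name required}"
  min_nodes="${2:-1}"
  max_nodes="${3:-5}"
  gcloud container clusters create "$cluster" \
    --num-nodes="$min_nodes" \
    --enable-autoscaling \
    --min-nodes="$min_nodes" \
    --max-nodes="$max_nodes"
}

# Step 2: add a further autoscaling node pool to an existing cluster.
add_autoscaling_node_pool() {
  cluster="${1:?cluster name required}"
  pool="${2:?node pool name required}"
  min_nodes="${3:-1}"
  max_nodes="${4:-5}"
  gcloud container node-pools create "$pool" \
    --cluster="$cluster" \
    --enable-autoscaling \
    --min-nodes="$min_nodes" \
    --max-nodes="$max_nodes"
}
```

For example, `create_autoscaling_cluster demo-cluster 1 4` followed by `add_autoscaling_node_pool demo-cluster extra-pool 2 6` would give a cluster with two pools that grow and shrink independently within their own limits.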

Step 3: Resize the Cluster Size

- To increase or decrease the number of nodes in a cluster as your requirements change, you can resize the cluster manually from the command line.

- When you increase the cluster size, the new nodes are created with the same configuration as the existing nodes. Newly created Pods may be scheduled onto the new instances, but existing Pods are not moved onto them.

- Similarly, when you decrease the cluster size, the Pods on the removed instances are killed. Pods managed by a replication controller are not lost; the controller reschedules them onto the remaining instances.
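A manual resize as described above might look like this on GKE. It is a sketch under the same assumptions as before (gcloud installed, authenticated, default project and zone set); the function name and its arguments are hypothetical.

```shell
# Sketch only: function name and argument values are illustrative.

# Set the node count of one node pool in a GKE cluster.
# --quiet skips gcloud's interactive confirmation prompt.
resize_cluster() {
  cluster="${1:?cluster name required}"
  pool="${2:?node pool name required}"
  nodes="${3:?target node count required}"
  gcloud container clusters resize "$cluster" \
    --node-pool="$pool" \
    --num-nodes="$nodes" \
    --quiet
}
```

Calling `resize_cluster demo-cluster default-pool 5` would grow or shrink the pool to five nodes, with the Pod behaviour described in the bullets above.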

Step 4: Scaling Application

- When you deploy an application in Google Kubernetes Engine (GKE), you define how many replicas of the application you would like to run. Scaling an application means increasing or decreasing that number of replicas. Each replica is a Kubernetes Pod, and each Pod encapsulates your application’s container or containers.

- The following paragraphs describe the methods you can use to scale your application. The “kubectl scale” command is the fastest and simplest way to scale. However, you may prefer another method in some situations, for example when you are updating configuration files or performing in-place modifications.

- You can use “kubectl apply” to modify a controller’s configuration by applying a new configuration file to it. “kubectl apply” is useful when you want to make multiple changes to a resource, and for users who prefer to manage their resources through configuration files.

- To scale using “kubectl apply”, the configuration file you provide must contain a new number of replicas in the replicas field of the object’s specification. The config.yaml file shown later in this article is a configuration file for the “my-app” object. It describes a Deployment, so if you use another type of controller, such as a StatefulSet, change the kind accordingly. This example works best on a cluster with at least three nodes.
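The “kubectl scale” method mentioned above can be sketched as a one-line helper. The function name is hypothetical; my-app is the Deployment name used in this article, and the replica count is whatever you pass in.

```shell
# Sketch only: the function name is illustrative.

# Set the replica count of a Deployment directly, without editing
# any configuration file.
scale_deployment() {
  name="${1:?deployment name required}"
  replicas="${2:?replica count required}"
  kubectl scale deployment "$name" --replicas="$replicas"
}
```

For instance, `scale_deployment my-app 4` asks Kubernetes to run four replicas of my-app. Because this changes the live object only, a later “kubectl apply” of a file with a different replicas value will overwrite it.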

Inspecting an application

- Do not scale your application blindly. Before scaling, inspect the running application and make sure that it is healthy and behaving as expected.

- To list the applications deployed to your cluster, run “kubectl get [CONTROLLER]”, where [CONTROLLER] is a controller object type such as deployments or statefulsets. In Kubernetes you rarely create Pods directly; instead you create a Deployment, a StatefulSet, or another controller such as a CronJob, and the controller takes care of Pod management.

- For example, suppose you have already created a Deployment and you run “kubectl get deployments”. The output is similar for all object types, although it may differ slightly. For Deployments, the output has six columns:

- “NAME” lists the names of the Deployments in the cluster.

- “DESIRED” displays the desired number of replicas, that is, the desired state of the application, which you define when you create the Deployment.

- “CURRENT” displays how many replicas are currently running.

- “UP-TO-DATE” displays the number of replicas that have been updated to match the desired state.

- “AVAILABLE” displays how many replicas are available to your users.

- “AGE” displays how long the application has been running in the cluster.

- In this example, there is one Deployment, web, which has only one replica because its desired state is one replica. You define the desired state at creation time, and you can change it at any time by scaling the application.
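The inspection described above could be scripted roughly as follows. The function names are hypothetical; web is the Deployment name from the example. Recent kubectl versions print a single READY column in place of DESIRED and CURRENT, so the columns you see may differ from the list above. Reading the desired count straight from the object's spec sidesteps that difference.

```shell
# Sketch only: function names are illustrative.

# List all Deployments in the current namespace.
list_deployments() {
  kubectl get deployments
}

# Print only the desired replica count of one Deployment,
# read from the replicas field of its spec.
desired_replicas() {
  name="${1:?deployment name required}"
  kubectl get deployment "$name" -o jsonpath='{.spec.replicas}'
}
```

For example, `desired_replicas web` prints the value that the DESIRED column describes.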

Inspecting StatefulSets

- Before you scale a StatefulSet, inspect it by running “kubectl describe statefulset [STATEFULSET_NAME]”.

- In the output of this command, check the Pods Status field. If the Failed value is greater than zero, scaling might fail. If a StatefulSet appears to be unhealthy, run “kubectl get pods” to see which replicas are unhealthy. Then run “kubectl delete pod [POD]”, where [POD] is the name of the unhealthy Pod.

- Do not try to scale a StatefulSet while it is unhealthy, because doing so may make it unavailable.
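The health check described above can be sketched as three small helpers. The function names are hypothetical, and the StatefulSet and Pod names are whatever exists in your cluster.

```shell
# Sketch only: function names are illustrative.

# Show details of a StatefulSet, including its Pods Status line.
inspect_statefulset() {
  name="${1:?StatefulSet name required}"
  kubectl describe statefulset "$name"
}

# List Pods so that unhealthy replicas can be identified.
list_pods() {
  kubectl get pods
}

# Delete one unhealthy Pod; the StatefulSet controller recreates it.
delete_pod() {
  pod="${1:?Pod name required}"
  kubectl delete pod "$pod"
}
```

A typical sequence would be `inspect_statefulset my-statefulset`, then `list_pods`, then `delete_pod` for each Pod that is failing, before any attempt to scale.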

config.yaml file:
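A minimal sketch of such a file, written to disk by a small shell helper. Only the my-app name, the Deployment kind, and the replicas value of 5 come from the text; the function name write_config, the labels, the container name, and the nginx image are assumptions made for illustration.

```shell
# Sketch only: write_config, the labels, the container name and the
# image are illustrative placeholders.

# Write a Deployment manifest for my-app with five replicas.
write_config() {
  path="${1:-config.yaml}"
  cat > "$path" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 5
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
      - name: my-container
        image: nginx:1.25
EOF
}
```

Running `write_config` with no argument creates config.yaml in the current directory.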

- In the above file, the value of the replicas field is 5. When this configuration file is applied to the existing my-app controller object, the number of replicas of my-app changes to five.

- To apply the updated configuration file, execute the following command:
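Assuming the file is saved as config.yaml, the apply step could be sketched as below. The function name is hypothetical.

```shell
# Sketch only: the function name is illustrative.

# Apply a configuration file to the cluster; kubectl creates the
# object if it is missing and updates it otherwise.
apply_config() {
  file="${1:-config.yaml}"
  kubectl apply -f "$file"
}
```

After `apply_config config.yaml`, running “kubectl get deployments” again should show the replica count of my-app moving toward five.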

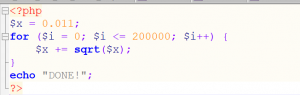

Dockerfile for creating a sample PHP application:

Index.php file:

Running the Deployment:

Once you have created the Dockerfile and the application, run the deployment file as below:

Verify that the deployment succeeded:

With the command “kubectl get deployment”, you can list all the Deployments in the current namespace.
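Running and verifying a deployment could be combined in one helper, sketched below. The function name, the manifest file name, and the Deployment name are assumptions; substitute the file and name you actually created.

```shell
# Sketch only: the function name and its arguments are illustrative.

# Apply a manifest, wait for the rollout to finish, then list the
# Deployment. Each step runs only if the previous one succeeded.
deploy_and_verify() {
  manifest="${1:?manifest path required}"
  name="${2:?deployment name required}"
  kubectl apply -f "$manifest" &&
    kubectl rollout status "deployment/$name" &&
    kubectl get deployment "$name"
}
```

For example, `deploy_and_verify deployment.yaml web` returns a non-zero exit status if any of the three steps fails, which makes it usable in scripts.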

Load Generator:

- At times you need to generate background load to stress-test software running on a cluster.

- Here, we start two infinite loops of requests against the application in separate terminals to increase the load.
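One way to produce such a loop is a throwaway busybox Pod that requests a URL over and over. This is a sketch, assuming the application is reachable inside the cluster through a Service; the function name, the Pod names, and the URL http://web are placeholders.

```shell
# Sketch only: function name, Pod name and URL are illustrative.

# Start an interactive busybox Pod that fetches a URL in an endless
# loop. Stop it with Ctrl+C; --rm deletes the Pod afterwards.
start_load_generator() {
  pod_name="${1:?pod name required}"
  url="${2:?target URL required}"
  kubectl run "$pod_name" -i --tty --rm \
    --image=busybox --restart=Never -- \
    /bin/sh -c "while sleep 0.01; do wget -q -O- $url; done"
}
```

To run two loops as described, call `start_load_generator load-1 http://web` in one terminal and `start_load_generator load-2 http://web` in another.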

Autoscaling Deployments:

- You can autoscale Deployments based on their Pods’ CPU utilization with the “kubectl autoscale” command. The command creates a HorizontalPodAutoscaler (HPA) object, which scales a specified resource called the scale target.

- The HPA periodically adjusts the number of replicas of the scale target, increasing or decreasing it so that the average CPU utilization matches the target percentage you specify.

- When you use “kubectl autoscale”, you specify a maximum and a minimum number of replicas for your application, as well as a CPU utilization target. For example, to set the maximum number of replicas to eight and the minimum to two, with a CPU utilization target of 50%, execute “kubectl autoscale deployment my-app --max 8 --min 2 --cpu-percent 50”.

- In the above command, the “--max” flag is the maximum number of replicas the Deployment can scale up to, and the “--min” flag is the minimum. The “--cpu-percent” flag is the target average CPU utilization across all Pods of the scale target. Passing a maximum of 8 and a minimum of 2 does not immediately scale the Deployment to eight replicas; the HPA scales up only as CPU utilization demands.

- Once you run “kubectl autoscale”, the HorizontalPodAutoscaler object is created and manages the scale target. Whenever the workload changes, the HPA scales the application’s replicas up or down.
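The autoscale step described above can be wrapped in a parameterised helper, sketched here. The function name and the defaults are illustrative; the defaults mirror the minimum of 2, maximum of 8, and 50% target used in the text. CPU-based autoscaling generally only works when the Pods declare CPU requests and the cluster exposes resource metrics, so treat both as assumptions of this sketch.

```shell
# Sketch only: the function name and defaults are illustrative.

# Create a HorizontalPodAutoscaler for a Deployment.
# Defaults: 2 to 8 replicas, 50% average CPU utilization target.
autoscale_deployment() {
  name="${1:?deployment name required}"
  min="${2:-2}"
  max="${3:-8}"
  cpu="${4:-50}"
  kubectl autoscale deployment "$name" \
    --min="$min" --max="$max" --cpu-percent="$cpu"
}
```

For example, `autoscale_deployment my-app` uses the defaults, while `autoscale_deployment my-app 1 4 70` sets tighter limits and a higher target.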

- To see a specific HorizontalPodAutoscaler object in your cluster, run:

“kubectl get hpa [HPA_NAME]”

- To view the HorizontalPodAutoscaler configuration, run:

“kubectl get hpa [HPA_NAME] -o yaml”

- In the output, the “targetCPUUtilizationPercentage” field holds the 50 percent value passed in from the kubectl autoscale example.

- To see a detailed description of a specific HorizontalPodAutoscaler object in the cluster, execute the below command:

“kubectl describe hpa [HPA_NAME]”

Note: You can modify the HorizontalPodAutoscaler object with “kubectl apply” (passing a new configuration file), “kubectl edit”, or “kubectl patch”.

- To delete a specific HorizontalPodAutoscaler object in your cluster, run the below command:

“kubectl delete hpa [HPA_NAME]”
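To make the scaling behaviour described in this section concrete, the function below sketches the replica calculation that the Kubernetes documentation gives for the HPA: desired replicas = ceil(current replicas × current utilization / target utilization), clamped to the configured minimum and maximum. It is a simplified model, not the controller's code: it ignores the tolerance band, Pod readiness, and stabilization windows, and the function name is hypothetical.

```shell
# Sketch only: a simplified model of the HPA replica calculation.
# Arguments: current replicas, current average CPU utilization (%),
# target CPU utilization (%), minimum replicas, maximum replicas.
hpa_desired_replicas() {
  current="${1:?current replicas required}"
  current_util="${2:?current utilization required}"
  target_util="${3:?target utilization required}"
  min="${4:?minimum replicas required}"
  max="${5:?maximum replicas required}"

  # Integer ceiling of current * current_util / target_util.
  desired=$(( (current * current_util + target_util - 1) / target_util ))

  # Clamp the result to the configured range.
  if [ "$desired" -lt "$min" ]; then desired="$min"; fi
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}
```

With the limits from the earlier example, `hpa_desired_replicas 2 100 50 2 8` prints 4: two replicas running at twice the target utilization are doubled. At very low load the result is held at the minimum of 2, and at very high load it is capped at 8.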

Conclusion:

Here I have explained the problem statement and a solution to the underlying infra challenges of deploying applications to a cluster. I have also covered the autoscaling and cluster resizing functionality in Kubernetes.

FAQs:

Subject: Application deployment to Cluster, Kubernetes Autoscaling, and Cluster Resizing:

- What is a horizontal pod auto-scaler in Kubernetes?

- How do you generate load on your server or cluster?

- What are the steps for running a deployment in Kubernetes?

- How do you inspect StatefulSets in Kubernetes?

- What are the various advantages of autoscaling in Kubernetes?